Last week, OpenAI unveiled its latest AI model, o1, after a wave of speculation about post-GPT-4 models with cryptic names including “Strawberry,” “Orion,” arguably “Q*,” and the obvious “GPT-5.” This new offering promises to push the boundaries of artificial intelligence with enhanced reasoning capabilities and scientific problem-solving prowess.

Developers, cybersecurity experts, and AI enthusiasts are abuzz with speculation about o1’s potential impact. In general, enthusiasts have praised it as a significant step forward in AI evolution, while others who are more familiar with the model have advised us to temper our expectations. OpenAI’s Joanne Jang made it clear from the beginning that o1 “isn’t a miracle model.”

🍓 there’s a lot of o1 hype on my feed, so i’m worried that it might be setting the wrong expectations

what o1 is: the first reasoning model that shines in really hard tasks, and it’ll only get better. (i’m personally psyched about the model’s potential & trajectory!)

what o1…

— Joanne Jang (@joannejang) September 12, 2024

At its core, o1 is the product of a concerted effort to tackle problems that have traditionally stumped AI systems, especially when prompts are imprecise or lack specificity. From complex coding challenges to intricate mathematical computations, this new model aims to outperform its predecessors (and in some cases, even human experts), particularly at tasks that require complex reasoning.

But it’s not perfect. OpenAI o1 grapples with performance issues, versatility challenges, and potential ethical quandaries that may make you think twice before choosing it as your new default model in the vast pool of LLMs to try.

We have been playing a little bit with o1-preview and o1-mini (maybe too little, since OpenAI only allows 30 or 50 interactions per week, depending on the model), and we have come up with a list of the things we like, hate, and are concerned about regarding this model.

The Good

Logical thinking

o1’s problem-solving capabilities stand out as its crowning achievement. OpenAI argues that o1 often surpasses human PhD-level performance in solving specific problems, mainly in fields like biology and physics, where precision is paramount. This makes it an invaluable tool for researchers grappling with complex scientific questions or large amounts of complex data.

Zero-shot Chain of Thought

One of o1’s most intriguing features is its “Chain of Thought” processing method. This approach allows the AI to break down complicated tasks into smaller, more manageable steps, analyzing the potential consequences of each step before determining the best outcome.

It’s akin to watching a chess grandmaster dissect a game, move by move, or going through a reasoning session before making a decision.
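To make the idea concrete, here is a minimal sketch of zero-shot Chain of Thought as a prompting technique: the question is sent with a reasoning trigger and no worked examples. This is only an illustration of the general approach. OpenAI has not published how o1 implements its internal reasoning, and the function names and the "Final answer:" marker below are hypothetical conventions chosen for this example.

```python
def build_direct_prompt(question: str) -> str:
    """Plain prompt: ask for the answer with no reasoning scaffold."""
    return f"Q: {question}\nA:"


def build_zero_shot_cot_prompt(question: str) -> str:
    """Zero-shot Chain of Thought: no examples, only a reasoning trigger."""
    return f"Q: {question}\nA: Let's think step by step."


def split_reasoning(completion: str, marker: str = "Final answer:") -> tuple[str, str]:
    """Split a completion into (reasoning steps, final answer).

    `marker` is a hypothetical convention for this sketch, not something
    a real model is guaranteed to emit.
    """
    reasoning, _, answer = completion.partition(marker)
    return reasoning.strip(), answer.strip()


if __name__ == "__main__":
    print(build_zero_shot_cot_prompt("If I have 3 boxes of 12 eggs and break 5, how many are left?"))
    # A hand-written completion, used only to show how the split works.
    sample = "3 boxes of 12 eggs is 36 eggs. Breaking 5 leaves 31. Final answer: 31"
    print(split_reasoning(sample))
```

With a model like o1, the user would send only the plain question, since the step-by-step part happens inside the model before it answers.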

TL;DR: o1’s logical and reasoning capabilities are outstanding.

OpenAI’s new o1 model is a BIG breakthrough in AI intelligence, if IQ tests say anything.

I gave it the Norway Mensa IQ test, and it blows other AIs out of the water.

I’m surprised!… Because there hadn’t been public progress in the last 6mo.

Link to full analysis below: pic.twitter.com/bRgdxvLkV1

— Maxim Lott (@maximlott) September 14, 2024

Coding and analysis

OpenAI o1 is particularly good when it comes to programming. From education to real-time code debugging and scientific research, o1 adapts to a wide range of professional applications.

OpenAI paid special attention to o1’s coding capabilities, making the model more powerful than its predecessors, and more versatile at understanding what users want before translating tasks into code.

Other models are good at coding, but applying Chain of Thought to a coding session makes the whole process more productive, and the model is capable of executing more complex tasks.

Jailbreak protection

OpenAI hasn’t overlooked the critical issue of ethics in AI development. The AI giant equipped o1 with built-in filtering systems designed to prevent harmful outputs. Some may see this as an artificial and unnecessary constraint, but big businesses that have to deal with the consequences of what an AI does in their name may be keen on a safer model that doesn’t recommend that people die, doesn’t produce illegal content, and is less prone to being tricked into proposing or accepting deals that may result in financial losses.

OpenAI claims that the model is designed to resist “jailbreaking,” or attempts to bypass its ethical constraints—a feature that’s likely to resonate with security-conscious users. But we already know their beta efforts were not good enough, though perhaps the official release candidate will do better.

The Bad

OpenAI O1 is not good at all. Not a new model, fine-tuned maybe. Slow, uses a crazy number of tokens and it’s not as useful as having an agent or straight up combing rag with agent with long term memory (sqlite)

— Daniel Merja (@danielmerja) September 12, 2024

It’s slooooow

The model exhibits sluggish performance, especially when compared to its speedier cousin, GPT-4o. In fact, the “mini” versions were specifically designed to be fast. This lack of responsiveness makes o1 less than ideal for tasks that demand rapid-fire interactions or high-pressure environments.

Of course, the more powerful a model is, the more computing time it will take. However, part of this sluggish experience is due to the embedded Chain of Thought reasoning process that it has to go through before providing an answer. The model “thinks” for around 10 seconds before starting to write its answers. In our tests, some tasks have taken the model more than a minute of “thought time” before answering.

Then, add another bit of time for the model to write a huge and long chain of thought process before giving you the simplest answer in the world. Not good, if patience isn’t your strongest suit.

It’s not multimodal (yet)

o1 also comes with a relatively stripped-down feature set. It’s missing some functionality that developers have come to rely on in models like GPT-4. These absent features include memory functions, file upload capabilities, data analysis tools, and web browsing abilities. For tech professionals accustomed to a fully-loaded AI toolkit, working with o1 might feel like downgrading from a Swiss Army knife to something like a standard blade.

Those using ChatGPT for more creative purposes are also locked out of the model’s true potential. If you need DALL-E, OpenAI o1 won’t work, and custom GPTs cannot benefit from this powerful model either. It’s text-only for now.

OpenAI promised to integrate those functions in the future, but as it stands today, a text-only chatbot may not be the best option for most of its users. On its own, a text-only LLM can write the prompt for an image generator, but it cannot produce the image. Not integrating DALL-E with OpenAI o1 seems like a step back in terms of versatility.

It sucks with creativity

Yes, o1 is great at reasoning, coding, and doing complex logical tasks. But ask it to create a novel, improve a literary text, or proofread a creative story and it will fall short.

OpenAI acknowledges this, and even in ChatGPT’s main UI, it says GPT-4 is better for complex tasks and o1 is best for advanced reasoning. As such, its groundbreaking model is weaker than the previous generation when users require a more “generalist” approach. This makes o1 feel like less of an overwhelming leap forward than the one Sam Altman promised, in which GPT-4 would look bad next to its successor.

The Ugly

OpenAI’s o1 model scored ‘Low’ in cybersecurity and model autonomy risks but ‘Medium’ in persuasion.

With AI influencing more decisions, where do we draw the line between helpful and manipulative? pic.twitter.com/bL1R5QkMnn

— Keshav Thakur (@Keshav__Thakur_) September 14, 2024

It’s expensive by design

Resource hunger is a significant drawback of o1. Its impressive reasoning skills come at a cost—both financial and environmental. The model’s appetite for processing power drives up operational expense and energy consumption. This could potentially price out smaller developers and organizations, limiting o1’s accessibility to those with deep pockets and robust infrastructure.

Remember, this model executes on-inference Chain of Thought, and the tokens outputted by the model before providing a useful answer are not on the house—you pay for them.

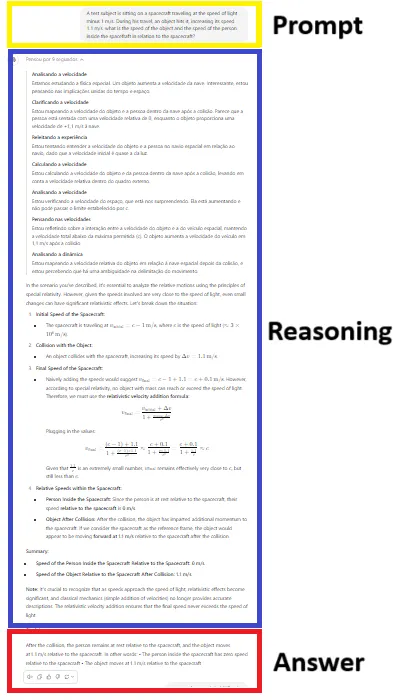

The model is designed to make you pay—a lot. For example, we asked o1: “A test subject is sitting on a spacecraft traveling at the speed of light minus 1 m/s. During his travel, an object hits it, increasing its speed 1.1 m/s. What is the speed of the object and the speed of the person inside the spacecraft in relation to the spacecraft?”

It generated 1,184 thinking tokens for a 68-token final conclusion, which was incorrect, by the way.
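To put those numbers in perspective, here is a rough back-of-the-envelope sketch of what the reasoning overhead means for a bill. It assumes that reasoning tokens are charged at the same rate as ordinary output tokens, and it uses an illustrative price of $60 per million output tokens. That price is an assumption for this example and should be checked against OpenAI's current pricing page. The function and class names are hypothetical.

```python
from dataclasses import dataclass

# ASSUMPTION: illustrative price in USD per 1M output tokens; verify before relying on it.
ASSUMED_USD_PER_MILLION_OUTPUT_TOKENS = 60.0


@dataclass
class OutputBill:
    billed_tokens: int                 # reasoning tokens + visible answer tokens
    reasoning_share: float             # fraction of billed output spent on reasoning
    reasoning_per_answer_token: float  # hidden tokens paid for per visible token
    cost_usd: float                    # estimated cost of the output side only


def estimate_output_bill(
    reasoning_tokens: int,
    answer_tokens: int,
    usd_per_million: float = ASSUMED_USD_PER_MILLION_OUTPUT_TOKENS,
) -> OutputBill:
    """Estimate output-side cost when reasoning tokens are billed like output tokens."""
    if reasoning_tokens < 0 or answer_tokens <= 0:
        raise ValueError("reasoning_tokens must be >= 0 and answer_tokens must be > 0")
    billed = reasoning_tokens + answer_tokens
    return OutputBill(
        billed_tokens=billed,
        reasoning_share=reasoning_tokens / billed,
        reasoning_per_answer_token=reasoning_tokens / answer_tokens,
        cost_usd=billed * usd_per_million / 1_000_000,
    )


# Token counts from the spacecraft prompt described above.
bill = estimate_output_bill(reasoning_tokens=1184, answer_tokens=68)
print(f"billed output tokens: {bill.billed_tokens}")
print(f"share spent on reasoning: {bill.reasoning_share:.1%}")
print(f"reasoning tokens per answer token: {bill.reasoning_per_answer_token:.1f}")
print(f"estimated output cost: ${bill.cost_usd:.4f}")
```

For this one prompt, roughly 95% of the billed output is reasoning the user never asked to read, or about 17 reasoning tokens for every token of the final answer. The per-request cost is small, but the ratio is what matters once the same pattern repeats across thousands of API calls.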

Inconsistencies are likely

Another concerning point is its inconsistent performance, particularly in creative problem-solving tasks. The model has been known to generate a series of incorrect or irrelevant answers before finally arriving at the correct solution.

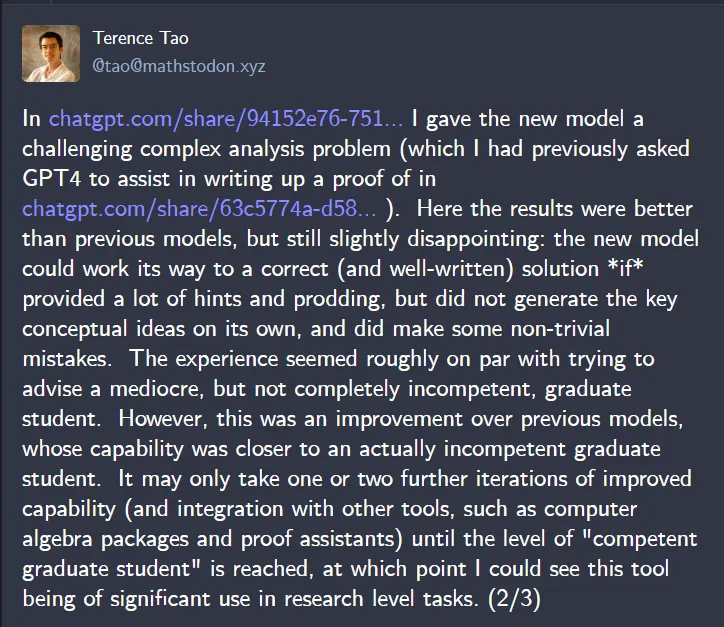

For example, renowned mathematician Terence Tao said, “The experience seemed roughly on par with trying to advise a mediocre, but not completely incompetent, graduate student.”

However, due to its convincing Chain of Thought—which, let’s be honest, you’ll probably skip to get to the actual answer fast—the little subtleties may be hard to spot. That’s especially true for those relying on it to solve problems, instead of those who just want to test or benchmark the model to see how good it is.

This unpredictability necessitates constant human oversight, potentially negating some of the efficiency gains promised by AI. It’s almost as if the model does not actually think, and instead was trained on a big dataset of problem-solving steps and knows how similar problems were solved before, applying the same patterns in its internal Chain of Thought.

So no, AGI is not upon us. But let’s be clear: this is as close as we have gotten.

OpenAI is playing Big Brother

This is another pretty concerning point to consider for those who value their privacy deeply. Ever since the Sam Altman drama, OpenAI has been known for steering its path toward a more corporate approach, caring less about safety and more about profitability.

The entire Superalignment team was dissolved, the company started to make deals with the military, and now it is giving the government access to models before deployment as AI has quickly turned into a matter of national interest.

And lately, there have been reports that point to OpenAI closely watching what users prompt, manually evaluating their interactions with the model.

One notable example comes from Pliny—arguably the most popular LLM jailbreaker in the scene—who reported that OpenAI locked him out, preventing his prompts from being processed by o1.

Other users have also reported receiving emails from OpenAI after interacting with the model.

This is part of OpenAI’s normal actions to improve its models and safeguard its interests. “When you use our services, we collect personal information that is included in the input, file uploads, or feedback that you provide to our services (‘content’),” an official post reads.

If the prompts or data provided by clients are found to be against its terms of service, then OpenAI can take action to prevent this from happening again before terminating an account or reporting it to the authorities. Emails are part of those actions.

However, actively gatekeeping the model does not make it inherently better at detecting and combating harmful prompts. It also limits the model’s capabilities and potential, since its usability depends on OpenAI’s subjective ruling over which interactions can go through.

Also, having a corporation monitoring its users’ interactions and uploaded data is hardly the best thing for privacy. It’s even worse considering how much less of a priority security has become for OpenAI, and how much closer it is now to government agencies.

But, who knows? We may be paranoid. After all, OpenAI promises that it respects a zero data retention policy—if enterprises ask for it.

There are two ways for non-enterprise users to limit what OpenAI collects. Under the configuration tab, users must click on the data control option and find a button named “Make this model better for everybody”—which they must turn off in order to prevent OpenAI from collecting data out of their private interactions.

Also, users can go to the personalization option and uncheck ChatGPT’s memory to prevent it from collecting data out of their conversations. These two options are turned on by default, giving OpenAI access to users’ data until the moment they opt out.

Is OpenAI o1 for you?

OpenAI’s o1 model represents a shift from the company’s previous approach of creating broadly applicable AI. Instead, o1 is tailored for professional and highly technical use cases, with OpenAI stating that it’s not meant to replace GPT-4 in all scenarios.

This specialized focus means o1 isn’t a one-size-fits-all solution. It’s not ideal for those seeking concise answers, purely factual responses, or creative writing assistance. Additionally, developers working with API credits should be cautious due to o1’s resource-intensive nature, which could lead to unexpected costs.

However, o1 could be a game-changer for work involving complex problem-solving and analytical thinking. Its ability to break down intricate problems methodically makes it excellent for tasks like analyzing complex data sets or solving multifaceted engineering problems.

It also simplifies workflows that previously required complex multi-shot prompting. For those dealing with challenging coding tasks, o1 offers significant potential in debugging, optimization, and code generation, although it’s positioned as an assistant rather than a replacement for human programmers.

Right now, OpenAI’s o1 is just a preview of things to come, but even prodigies have growing pains. The official model should be out this year, and it promises to be multimodal and quite impressive in every field, not just logical thinking.

“The upcoming AI model, likely to be called ‘GPT Next,’ will evolve nearly 100 times more than its predecessors, judging by past performance,” OpenAI Japan CEO Tadao Nagasaki said at the KDDI SUMMIT 2024 in Japan earlier this month. “Unlike traditional software, AI technology grows exponentially. Therefore, we want to support the creation of a world where AI is integrated as soon as possible.”

So it’s safe to expect fewer headaches and more “Aha!” moments from the final o1, assuming it doesn’t take too long to think about it.

Edited by Josh Quittner and Andrew Hayward

Jose Antonio Lanz

https://decrypt.co/249735/openais-o1-review-good-bad-ugly-ai-latest-brainchild

2024-09-17 19:45:59