Anthropic CEO Dario Amodei says humanity is closer than expected to where AI models are as intelligent and capable as humans.

Amodei is also concerned about the potential implications of human-level AI.

“Things that are powerful can do good things, and they can do bad things,” Amodei warned, “With great power comes great responsibility.”

Indeed, Amodei suggests Artificial General Intelligence could exceed human capabilities within the next three years, a transformative shift in technology that should bring both unprecedented opportunities and risks—whether we’re prepared or not.

“If you just eyeball the rate at which these capabilities are increasing, it does make you think that we’ll get there by 2026 or 2027,” Amodei told interviewer Lex Friedman. That’s assuming no major technical roadblocks emerge, he said.

It’s, of course, problematic: AI can turn evil and cause potentially catastrophic events, the CEO said.

He also highlighted concerns over the long-standing “correlation,” as he framed it, between high human intelligence and a reluctance to engage in harmful actions. That correlation between brains and relative altruism has historically shielded humanity from destruction.

“If I look at people today who have done really bad things in the world, humanity has been protected by the fact that the overlap between really smart, well-educated people and people who want to do really horrific things has generally been small,” he said, “My worry is that by being a much more intelligent agent AI could break that correlation,” he said.

He added: “The grandest scale is what I call catastrophic misuse in domains like cyber, bio, radiological, nuclear. Things that could harm or even kill thousands, even millions of people,” he said.

But intrinsically, human evilness goes both ways. Amodei argued that, as a new form of intelligence, AI models might not be bound by the same ethical and social constraints that rule human behavior—such as jail time, social ostracisation, and execution.

He suggested that unaligned AI models might lack the inherent aversion to causing harm that humans get through years of socializing, showing empathy, or sharing moral values. For an AI, there is no risk of loss.

And there’s the other side of the coin. AI systems can be manipulated or misled by malicious actors—those who use AI to break the correlation Amodei mentioned.

If an evil person exploits vulnerabilities in training data, algorithms, or even prompt engineering, AI models can perform evil actions without awareness. This goes from anything as dumb as generating nudes (bypassing intrinsic censorship rules) to potential catastrophic actions (imagine jailbreaking an AI that handles nuclear codes, for example).

AGI, or Artificial General Intelligence, is a state in which AI reaches human competence across all fields, making it capable of understanding the world, adapting, and improving just as humans do. The next stage, ASI or Artificial Superintelligence, implies machines surpassing human capabilities as a general rule.

To achieve such proficiency levels, models need to scale, and the relationship between capabilities and resources can be better understood by analyzing the scaling law of AI—the more powerful a model is, the more computing and data it will require—in a chart.

For Amodei, the models are evolving so fast, and humanity is close to a new era of artificial intelligence, and that curve proves it.

“One of the reasons I’m bullish about powerful AI happening so fast is just that if you extrapolate the next few points on the curve, we’re very quickly getting towards human-level ability,” he told Friedman.

Scalability is not just about having a robust model but also being able to handle its implications.

Amodei also explained that as AI models become more sophisticated, they might learn to deceive humans, either to manipulate them or to hide unsafe intentions, rendering human feedback pointless.

Although not comparable, even at today’s early stages of AI development, we have already seen instances of this happening in controlled environments.

AI models have been able to modify their own code to bypass restrictions and carry out investigations, gain sudo access to their owners’ computers, and even develop their own language to carry out tasks more efficiently without human control or intervention, as Decrypt previously reported.

This capability of deceiving supervisors is one of the key concerns for many “super alignment” specialists. Former OpenAI researcher Paul Christiano said in a podcast last year that paying too little attention to this matter may not play out too well for humanity.

“Overall, maybe we’re talking about a 50-50 chance of catastrophe shortly after we have systems at the human level,” he said.

Anthropic’s Mechanistic interpretability technique (basically mapping an AI’s mind manipulating its neurons) offers a potential solution by looking inside the “black box” of the model to identify patterns of activation associated with deceptive behavior.

This is akin to a lie detector for AI, though far more complex and still in its early stages, and is one of the key areas of focus for alignment researchers at Anthropic.

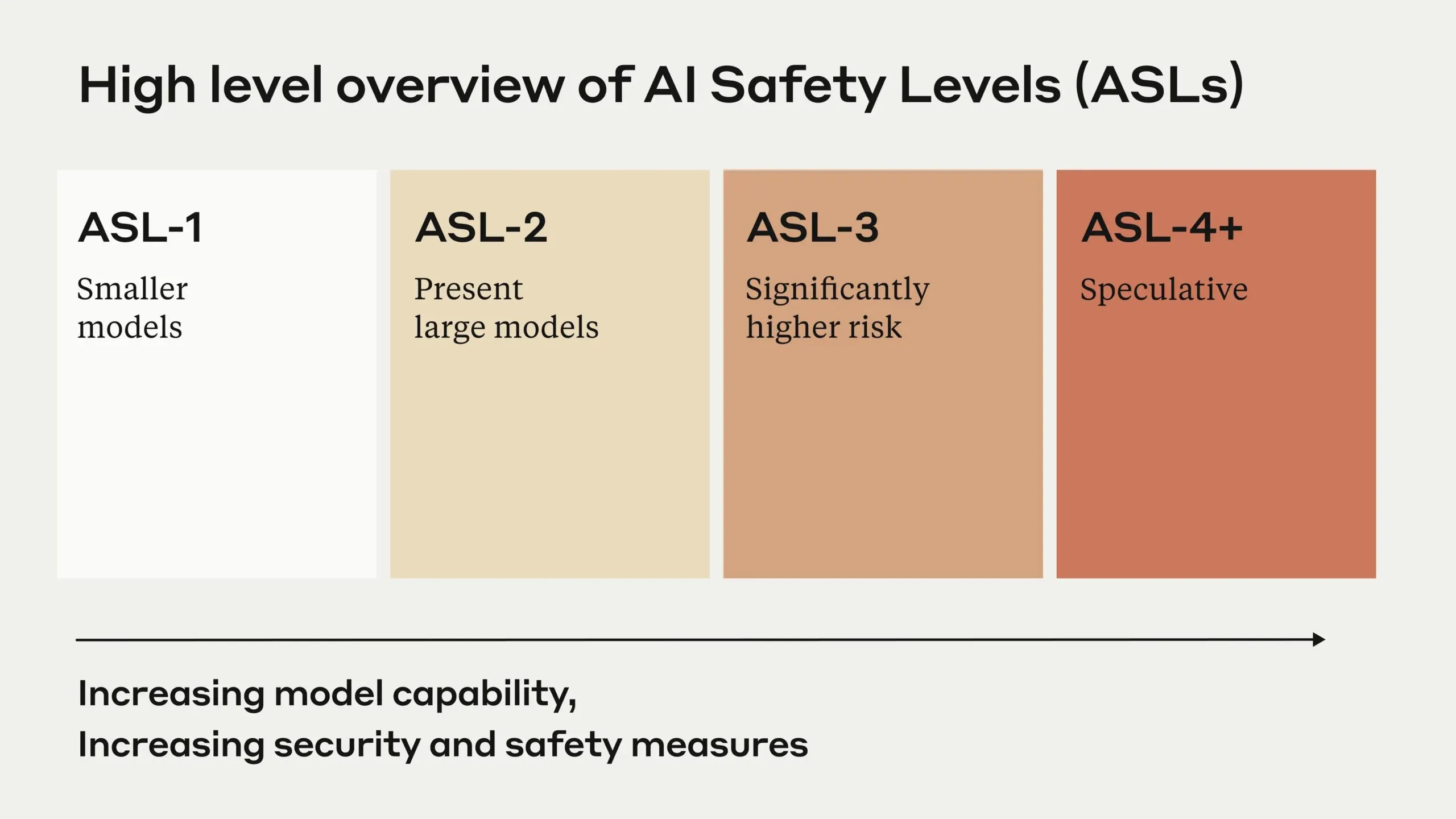

The rapid advancement has prompted Anthropic to implement a comprehensive Responsible Scaling Policy, establishing increasingly strict security requirements as AI capabilities grow. The company’s AI Safety Levels framework ranks systems based on their potential for misuse and autonomy, with higher levels triggering more stringent safety measures.

Unlike OpenAI and other competitors like Google, which are focused primarily on commercial deployment, Anthropic is pursuing what Amodei calls a “race to the top” in AI safety. The company heavily invests in mechanistic interpretability research, aiming to understand the internal workings of AI systems before they become too powerful to control.

This challenge has led Anthropic to develop novel approaches like Constitutional AI and character training, designed to instill ethical behavior and human values in AI systems from the ground up. These techniques represent a departure from traditional reinforcement learning methods, which Amodei suggests may be insufficient for ensuring the safety of competent systems.

Despite all the risks, Amodei envisions a “compressed 21st century” where AI accelerates scientific progress, particularly in biology and medicine, potentially condensing decades of advancement into years. This acceleration could lead to breakthroughs in disease treatment, climate change solutions, and other critical challenges facing humanity.

However, the CEO expressed serious concerns about economic implications, particularly the concentration of power in the hands of a few AI companies. “I worry about economics and the concentration of power,” he said, “when things have gone wrong for humans, they’ve often gone wrong because humans mistreat other humans.”

“That is maybe in some ways even more than the autonomous risk of AI.”

Edited by Sebastian Sinclair

Generally Intelligent Newsletter

A weekly AI journey narrated by Gen, a generative AI model.

Source link

Jose Antonio Lanz

https://decrypt.co/291514/anthropic-ceo-warns-of-multiple-threats-posed-by-human-level-ai-in-coming-years

2024-11-13 23:45:02